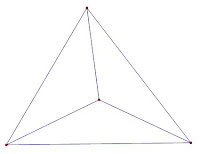

To the right we see a planar graph. It divides the plane into 5 regions, which I have labeled A, B, C, D and E. We call region D a triangle because it has three sides. Regions B, C and E are quadrilaterals since they have 4 sides. Even though A is an infinite region in the plane, it has 3 sides and is also called a triangle. The question we address here is whether we can draw planar graphs with any given combination of triangles, quadrilaterals, pentagons, etc. If not, can we determine which combinations are possible?

We will use the Euler Characteristic for the sphere to solve this problem. I know it looks like the figure above is drawn on the plane, but you can also think of it as drawn on a relatively flat part of a sphere. In this way, region A is not infinite, but we will still call the regions triangles, quadrilaterals, pentagons, etc., even though their sides are now curved. The Euler Characteristic is V – E + R, where V = number of vertices, E = number of edges (not the region E above) and R = number of regions. The Euler Characteristic depends only on the surface on which the graph is drawn, and not on the shape of the graph. For the sphere,

V – E + R = 2,

always. In the example above, V – E + R = 6 – 9 + 5 = 2.

Let’s do a little counting. In the figure above, E = 9 is the number of edges. However, if we count the sides of the polygons we get 2 triangles x 3 sides each + 3 quadrilaterals x 4 sides each = 18 sides. This is twice the number of edges, because each edge is counted twice: once for the polygon on one side of the edge and once for the polygon on the other side. For instance, the edge xy is counted once for the triangle D and once for the quadrilateral C.

What about the vertices? In the figure above, V = 6 is the number of vertices. If we count the vertices of the polygons we get 2 triangles x 3 vertices each + 3 quadrilaterals x 4 vertices each = 18. In this case, we get three times as many polygon vertices as graph vertices because there are three polygons meeting at each vertex. For example, polygons A, B and C meet at vertex t.
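The two counts above can be checked with a few lines of Python. This is only a sketch: the dictionary `sides` is a made-up representation of the figure, assuming the two triangles are A and D and the three quadrilaterals are B, C and E, with three polygons meeting at every vertex.

```python
# Number of sides of each region in the figure (an assumed encoding:
# A and D are triangles; B, C and E are quadrilaterals).
sides = {"A": 3, "B": 4, "C": 4, "D": 3, "E": 4}

R = len(sides)                      # number of regions
polygon_sides = sum(sides.values()) # sides counted polygon by polygon

# Each edge borders two polygons, so the side count is twice the edge count.
E = polygon_sides // 2
# Three polygons meet at each vertex, so the corner count is three times V.
V = polygon_sides // 3

euler = V - E + R
print(f"sides={polygon_sides}, V={V}, E={E}, R={R}, V - E + R = {euler}")
```

The printed values should agree with the figure: 18 polygon sides, 6 vertices, 9 edges, 5 regions and an Euler Characteristic of 2.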

These counting techniques and the Euler Characteristic will give us a system of equations for finding whether graphs with certain combinations of polygons are possible.

Example 1: Can we draw a planar graph with only triangles so that exactly three triangles meet at each vertex? If so, how many triangles will there be? We can answer this question with a system of linear equations. The first equation is the Euler Characteristic for the sphere:

V – E + R = 2.

Each region is 3 sided, but if we count 3R sides that will be double the edges since each side is counted twice:

3R = 2E.

Each region has 3 vertices, but if we count 3R vertices that will be triple the total vertices since three triangles meet at each vertex:

3R = 3V.

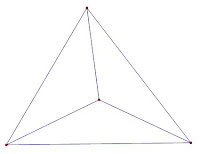

Solve this square system of 3 equations in 3 unknowns using your favorite method, and we find there is only one way to do this:

V = 4, E = 6 and R = 4.

The only solution is to have 4 triangles, 4 vertices and 6 edges as shown on the right, remembering that the outside region is a triangle. So, we could never draw a graph that had 5 triangles such that 3 triangles meet at each vertex. Give it a try to see why it can't be done.
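If you would rather let a computer do the elimination, here is a minimal sketch of one possible "favorite method": Gauss-Jordan elimination with exact fractions, using only the Python standard library. The function names `solve_square` and `triangles_meeting` and the parameter `k` (the number of triangles meeting at each vertex) are my own choices for illustration, not anything from the reference.

```python
from fractions import Fraction

def solve_square(a, b):
    """Solve a square system a*x = b by Gauss-Jordan elimination.

    Uses exact fractions; returns None if no pivot can be found
    in some column (the system has no unique solution).
    """
    n = len(a)
    # Build the augmented matrix [a | b] with exact arithmetic.
    m = [[Fraction(x) for x in row] + [Fraction(rhs)] for row, rhs in zip(a, b)]
    for col in range(n):
        pivot = next((r for r in range(col, n) if m[r][col] != 0), None)
        if pivot is None:
            return None
        m[col], m[pivot] = m[pivot], m[col]
        m[col] = [x / m[col][col] for x in m[col]]  # scale pivot row to 1
        for r in range(n):
            if r != col and m[r][col] != 0:
                factor = m[r][col]
                m[r] = [x - factor * y for x, y in zip(m[r], m[col])]
    return [row[n] for row in m]

def triangles_meeting(k):
    """Solve for (V, E, R) when k triangles meet at each vertex."""
    a = [[1, -1, 1],   # V - E + R = 2
         [0, -2, 3],   # 3R = 2E
         [-k, 0, 3]]   # 3R = kV
    b = [2, 0, 0]
    return solve_square(a, b)

v, e, r = triangles_meeting(3)
print(f"V={v}, E={e}, R={r}")  # prints V=4, E=6, R=4
```

Only the last row of the matrix depends on `k`, so the same function can be reused with other values of `k`.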

Questions: Can we draw a planar graph of triangles where 4 triangles meet at each vertex? 5 triangles meet at each vertex? 6 triangles meet at each vertex? How would we change the system above to answer these questions? If the graph exists, try to draw it.

Example 2: What if there are two different types of polygons? Consider a graph of triangles and quadrilaterals, assuming that three polygons meet at each vertex. We will introduce two new variables: T and Q, the counts of the triangles and quadrilaterals, respectively. Now, the total number of regions is the sum of the two types of polygons,

T + Q = R.

Count the edges, 3 for each triangle and 4 for each quadrilateral, and as in Example 1, this counts each edge twice:

3T + 4Q = 2E.

Count the vertices, 3 for each triangle and 4 for each quadrilateral, and as in Example 1, this counts each vertex thrice, because 3 polygons meet at each vertex:

3T + 4Q = 3V.

Finally, we need the Euler Characteristic:

V – E + R = 2.

This time we’ll use a matrix and row reduction to get the solution. The system is underdetermined, so we expect to get infinitely many solutions.
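The matrix is not reproduced in the text, so here is one way to set it up. The column order T, Q, V, E, R is my assumption (any order works, but this one leaves R as the free variable), and each equation is rewritten with all unknowns on the left. The augmented matrix and its reduced row echelon form are then:

```latex
\left[\begin{array}{rrrrr|r}
1 & 1 &  0 &  0 & -1 & 0 \\
3 & 4 &  0 & -2 &  0 & 0 \\
3 & 4 & -3 &  0 &  0 & 0 \\
0 & 0 &  1 & -1 &  1 & 2
\end{array}\right]
\;\longrightarrow\;
\left[\begin{array}{rrrrr|r}
1 & 0 & 0 & 0 &  2 &  12 \\
0 & 1 & 0 & 0 & -3 & -12 \\
0 & 0 & 1 & 0 & -2 &  -4 \\
0 & 0 & 0 & 1 & -3 &  -6
\end{array}\right]
```

The rows of the first matrix are, in order, T + Q – R = 0, 3T + 4Q – 2E = 0, 3T + 4Q – 3V = 0 and V – E + R = 2. There are four pivots and five columns of unknowns, so the last column, R, has no pivot.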

Sure enough, we have a free variable, R, and we can write the general solution as

T = 12 – 2R, Q = –12 + 3R, V = –4 + 2R and E = –6 + 3R.

But in this application, the values of T, Q, V and E have physical meaning and must be positive. If V or E is zero, then the graph would be empty. We could allow T or Q to be zero, but we are interested in graphs with both triangles and quadrilaterals. Now we can solve the inequalities below to see if there is a viable solution, and how many there are.

T = 12 – 2R > 0 => R < 6

Q = –12 + 3R > 0 => R > 4

V = –4 + 2R > 0 => R > 2

E = –6 + 3R > 0 => R > 2

Okay, R is an integer with 4 < R < 6, so R = 5 is the only realistic solution to this underdetermined system. Now,

R = 5, T = 2, Q = 3, V = 6 and E = 9.
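As a sanity check on the inequalities, a short brute-force search over R does the same job. This is just a sketch: the function name `general_solution` and the search range of 1 to 20 are arbitrary choices of mine, and the formulas are the general solution written above.

```python
def general_solution(r):
    """General solution of the Example 2 system in terms of the free variable R."""
    return {"T": 12 - 2 * r, "Q": -12 + 3 * r, "V": -4 + 2 * r, "E": -6 + 3 * r, "R": r}

# Keep only the values of R for which every count is strictly positive.
feasible = [
    s for s in (general_solution(r) for r in range(1, 21))
    if all(value > 0 for value in s.values())
]
print(feasible)  # prints [{'T': 2, 'Q': 3, 'V': 6, 'E': 9, 'R': 5}]
```

T becomes zero or negative once R reaches 6, so widening the search range would not turn up any further solutions.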

Draw this graph (don’t forget that the outside region is one of the 5 regions and must be either a quadrilateral or a triangle). The graph is at the bottom of this blog, but don’t peek before you give it a try.

Questions:

1. Can you draw a planar graph with pentagons and hexagons such that three polygons meet at each vertex? If so, how many of each polygon are there? Can you draw them?

2. Can you draw a planar graph with triangles and quadrilaterals such that four polygons meet at each vertex? I have written the equations and solved the system for this case, and this may have infinitely many solutions, but I haven’t had the time to draw more than two of the solutions and would like to see an algorithm for drawing all of them.

3. Other surfaces, such as a torus (donut) have different Euler Characteristics. How does one draw a graph on a torus? What are the solutions to the questions above if the graphs live on a torus?

Wolfram MathWorld has a list of the Euler Characteristics for surfaces, and Wikipedia has nice images of those surfaces if you scroll to the bottom of the article.

To limit this blog to a few pages, a lot is left unsaid. But again, these posts aren’t meant to give an in-depth discussion of the topic, but just an introduction. Go exploring for more about this topic.

Reference: Alain M. Robert, An Approach of Linear Algebra through Examples and Applications